This post is one of a series in which I will blog my way through migrating from NSX-V to NSX-T, sharing the issues I hit and the best practices that could be helpful.

First, I think it’s a good idea to briefly describe my platform and versions, to give you a better picture of the current state of the environment and where I am trying to go.

My current environment consists of a number of ESXi hosts running vSphere 6.7 U3 and NSX-V 6.4.6, managed by vCenter Server 6.7 U3. On top of that, I have vCloud Director 10.1, which abstracts the whole underlying platform into tenants.

Yes, it’s a vCD platform, so these posts should be especially interesting to other VMware Cloud Provider Program (VCPP) partners, for whom NSX-V to NSX-T migration is, I believe, the number one topic right now, both to keep the VMware Cloud Verified badge and to keep their platform supported by VMware GSS. That said, most of the posts should also be useful to anybody who runs VMware NSX-V and is now planning a migration to NSX-T.

VMware has already announced the end of life of NSX-V and is urging all NSX-V customers to migrate to NSX-T before mid-January 2022 (see VMware’s announcement).

I’m going for NSX-T 3.1, the latest NSX-T version at the time of writing, and VMware recommends having at least NSX-V 6.4.8 if you want to use the NSX-V2T Migration Tool created by the VMware vCloud Director Business Unit.

I won’t go through the migration tool in detail, but I advise you to keep checking the my.vmware portal for updates, as new versions with new enhancements are posted regularly. I would also definitely recommend running the tool in assessment mode before you plan the migration. It gives you a general idea of what the tool can migrate and, as in my case, flags prerequisites such as upgrading NSX-V to at least 6.4.8.

Alright then, enough of the intro 🙂 Let’s get into the topic of this post.

After verifying the interoperability of my versions with NSX-V 6.4.11, the target NSX-V version I decided to go for, I reviewed the upgrade procedure, which is pretty straightforward and well covered in the VMware documentation.

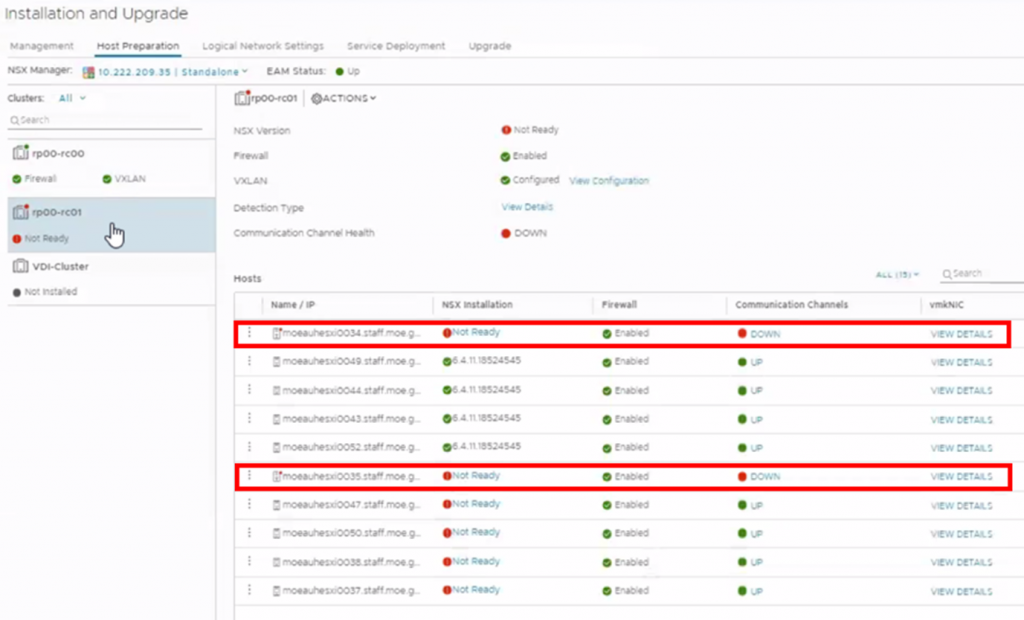

The upgrade went very smoothly until I ran into issues upgrading the NSX-V VIBs on my ESXi hosts.

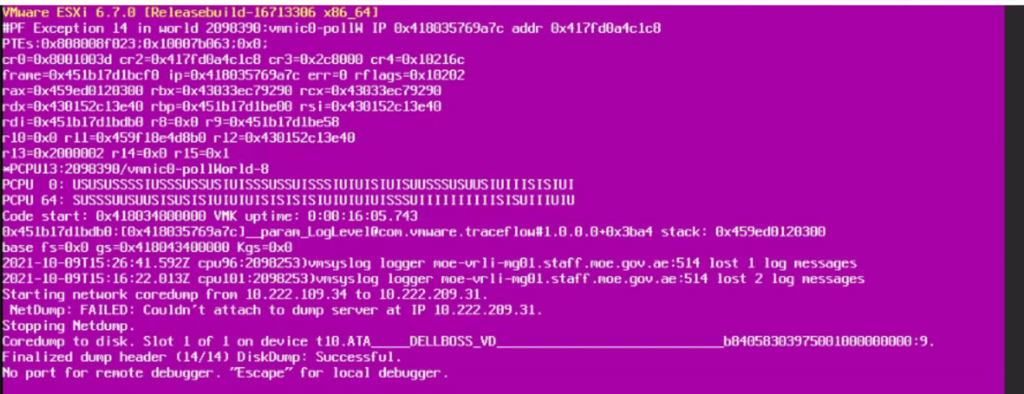

The issue was pretty annoying: the host hit a PSOD (Purple Screen of Death) as soon as vCenter EAM tried to upgrade the NSX-V VIB. Rebooting didn’t help, because whenever I rebooted the host after the PSOD, it reconnected to vCenter Server, EAM immediately tried to upgrade the NSX-V VIB again, and the host hit another PSOD 🙁 … a loop that at first I didn’t know how to get out of!

So my first challenge was to break this annoying EAM/PSOD loop so that I could troubleshoot the issue on the host!

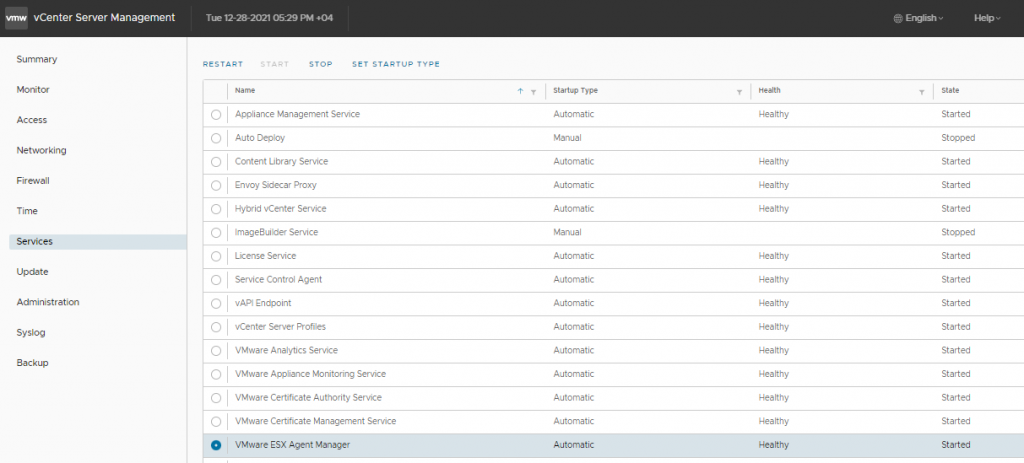

It doesn’t take a genius, but it took me some time before I thought of disabling the EAM service on the vCenter Server. That pauses the automatic upgrade that was pushing the NSX-V VIB every time I rebooted the host.

I disabled the EAM service by logging in to the vCenter Server Appliance and stopping “VMware ESX Agent Manager”.
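If you prefer the appliance shell to the UI, the same thing can be done with the `service-control` utility on the vCenter Server Appliance, where “VMware ESX Agent Manager” runs as the `vmware-eam` service. Below is a minimal Python sketch, assuming you run it on the appliance itself (or just copy the printed command). The helper names and the `eam_toggle.py` file name are hypothetical, and by default it is a dry run that only prints the command.

```python
import subprocess
import sys
from typing import List

# On the vCenter Server Appliance 6.x, "VMware ESX Agent Manager" is the vmware-eam service.
EAM_SERVICE = "vmware-eam"
ALLOWED_ACTIONS = ("stop", "start", "status")


def eam_command(action: str) -> List[str]:
    """Hypothetical helper: build the service-control command line for EAM."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError("unsupported action: " + action)
    return ["service-control", "--" + action, EAM_SERVICE]


def run_eam(action: str, dry_run: bool = True) -> List[str]:
    """Return the command and, unless dry_run is set, execute it on the appliance."""
    cmd = eam_command(action)
    if not dry_run:
        # check=True raises CalledProcessError if service-control exits non-zero.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Usage: python3 eam_toggle.py stop            (prints the command only)
    #        python3 eam_toggle.py stop --execute  (actually stops EAM)
    action = sys.argv[1] if len(sys.argv) > 1 else "status"
    execute = "--execute" in sys.argv[2:]
    print(" ".join(run_eam(action, dry_run=not execute)))
```

Once the host is healthy again, remember to start the service again (`service-control --start vmware-eam`), since EAM is what pushes the NSX-V VIBs to the hosts.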

Now I had some time to do some troubleshooting, or so I thought at the beginning 🙂

After checking the logs on the ESXi host, I couldn’t find anything useful other than the host complaining that it had failed to upgrade the NSX-V VIB. That wasn’t as helpful as I had hoped :/

Anyway, I decided to uninstall the NSX-V VIB and install it manually to see if that would help.

I have covered how to remove the NSX-V VIB manually in a separate blog post.
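For the gist, the manual removal on the host boils down to a few `esxcli` commands. The Python sketch below only assembles and prints them. It assumes the NSX-V kernel module VIB is named `esx-nsxv` (the name used by recent NSX-V 6.x releases, so confirm it with `esxcli software vib list` on your host first), and the helper name is hypothetical.

```python
from typing import List

# Assumption: the NSX-V VIB on recent 6.x releases is called esx-nsxv;
# confirm with "esxcli software vib list" before removing anything.
NSXV_VIB = "esx-nsxv"


def nsxv_vib_removal_commands(vib_name: str = NSXV_VIB) -> List[List[str]]:
    """Hypothetical helper: the esxcli steps to remove the NSX-V VIB from one host."""
    return [
        # 1. Enter maintenance mode (evacuate or power off the host's VMs first).
        ["esxcli", "system", "maintenanceMode", "set", "--enable", "true"],
        # 2. Confirm the VIB is present and note its installed version.
        ["esxcli", "software", "vib", "list"],
        # 3. Remove it; esxcli states in its output whether a reboot is required.
        ["esxcli", "software", "vib", "remove", "-n", vib_name],
    ]


if __name__ == "__main__":
    # Print the commands so they can be reviewed and pasted into the host's SSH session.
    for cmd in nsxv_vib_removal_commands():
        print(" ".join(cmd))
```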

Unfortunately, the manual procedure didn’t help either 🙁

After running out of options, and after going through multiple articles on the internet that recommended disabling VUM and IPFIX without any luck, I came across a VMware KB article about having vRealize Network Insight (vRNI) in your environment. YES, I do have vRNI in my environment, integrated with my NSX-V! But how could that be relevant to my issue?!

It turned out that vRNI’s Virtual Infrastructure Latency feature uses the BFD (Bidirectional Forwarding Detection) service of NSX-enabled hosts to establish tunnels between them. The PSOD occurs when the NSX kernel module responds to a detailed BFD tunnel query from the control plane agent with the states of all the BFD sessions maintained by the kernel.

Fair enough, so I went through the resolution section and disabled “Enable Virtual Infrastructure Latency” in vRNI as per the KB article, but that didn’t help in my case.

The second workaround, using the NSX API, did help, though: it fixed my issue as soon as I tried it.

Use this option if the vRNI appliance is not accessible or if the Virtual Infrastructure Latency feature was enabled through the NSX API.

Use the GET API to determine the BFD status:

```http
GET /api/2.0/vdn/bfd/configuration/global
```

Response:

```xml
<bfdGlobalConfiguration>
  <enabled>true</enabled>
  <pollingIntervalSecondsForHost>180</pollingIntervalSecondsForHost>
  <bfdIntervalMillSecondsForHost>120000</bfdIntervalMillSecondsForHost>
</bfdGlobalConfiguration>
```
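If you’d rather script this check than use a REST client, here is a minimal Python sketch using only the standard library. It assumes NSX Manager accepts HTTP basic authentication with an NSX admin account, as the NSX-V API does; the manager hostname, credentials and function names are placeholders of mine, not something from the KB.

```python
import base64
import ssl
import urllib.request
import xml.etree.ElementTree as ET

BFD_PATH = "/api/2.0/vdn/bfd/configuration/global"


def parse_bfd_enabled(xml_text: str) -> bool:
    """Return True if <enabled> in a bfdGlobalConfiguration document is 'true'."""
    root = ET.fromstring(xml_text)
    # findtext returns None when <enabled> is missing, hence the "or" fallback.
    return (root.findtext("enabled") or "").strip().lower() == "true"


def get_bfd_config(manager: str, user: str, password: str, verify_tls: bool = True) -> str:
    """GET the global BFD configuration from NSX Manager and return the raw XML."""
    token = base64.b64encode((user + ":" + password).encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        "https://" + manager + BFD_PATH,
        headers={"Authorization": "Basic " + token, "Accept": "application/xml"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # Lab-only shortcut for NSX Managers still using self-signed certificates.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    sample = """<bfdGlobalConfiguration>
  <enabled>true</enabled>
  <pollingIntervalSecondsForHost>180</pollingIntervalSecondsForHost>
  <bfdIntervalMillSecondsForHost>120000</bfdIntervalMillSecondsForHost>
</bfdGlobalConfiguration>"""
    print(parse_bfd_enabled(sample))  # True
    # Against a real manager (hostname and credentials are placeholders):
    # print(parse_bfd_enabled(get_bfd_config("nsxmgr.example.local", "admin", "...")))
```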

Use the PUT API to change the BFD configuration, setting `<enabled>` to false:

```http
PUT /api/2.0/vdn/bfd/configuration/global
```

Request Body:

```xml
<bfdGlobalConfiguration>
  <enabled>false</enabled>
  <pollingIntervalSecondsForHost>180</pollingIntervalSecondsForHost>
  <bfdIntervalMillSecondsForHost>120000</bfdIntervalMillSecondsForHost>
</bfdGlobalConfiguration>
```
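The PUT can be scripted the same way. This is a minimal standard-library sketch, assuming HTTP basic authentication against NSX Manager; the hostname, credentials and function names are hypothetical. It reads the current configuration and flips only `<enabled>`, so the polling and BFD intervals stay exactly as returned by the GET, matching the request body above.

```python
import base64
import ssl
import urllib.request
import xml.etree.ElementTree as ET

BFD_PATH = "/api/2.0/vdn/bfd/configuration/global"


def disable_bfd_body(current_xml: str) -> str:
    """Copy the current configuration, changing only <enabled> to false."""
    root = ET.fromstring(current_xml)
    enabled = root.find("enabled")
    if enabled is None:
        raise ValueError("no <enabled> element in the BFD configuration")
    enabled.text = "false"
    return ET.tostring(root, encoding="unicode")


def put_bfd_config(manager: str, user: str, password: str, body: str,
                   verify_tls: bool = True) -> int:
    """PUT the BFD configuration to NSX Manager and return the HTTP status code."""
    token = base64.b64encode((user + ":" + password).encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        "https://" + manager + BFD_PATH,
        data=body.encode("utf-8"),
        method="PUT",
        headers={"Authorization": "Basic " + token, "Content-Type": "application/xml"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # Lab-only shortcut for self-signed NSX Manager certificates.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    # urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    with urllib.request.urlopen(request, context=context) as response:
        return response.status


if __name__ == "__main__":
    current = ("<bfdGlobalConfiguration><enabled>true</enabled>"
               "<pollingIntervalSecondsForHost>180</pollingIntervalSecondsForHost>"
               "<bfdIntervalMillSecondsForHost>120000</bfdIntervalMillSecondsForHost>"
               "</bfdGlobalConfiguration>")
    print(disable_bfd_body(current))
    # Against a real manager (placeholders):
    # put_bfd_config("nsxmgr.example.local", "admin", "...", disable_bfd_body(current))
```

Running the GET again afterwards should return `<enabled>false</enabled>`, which is a quick way to confirm the change took effect before re-enabling EAM.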

I hope this post is useful if you run into the same PSOD situation. I’ll be back with more posts whenever I find something worth sharing.

Written by,

Mohamed Basha

Solutions Architect, Cloud and Datacenter.